
The Promise & Risks of Physical AI's Self-Optimizing Factories & Human-AI Teaming

Q&A with Mat Gilbert, Director, Head of AI & Data | Synapse, part of Capgemini Invent

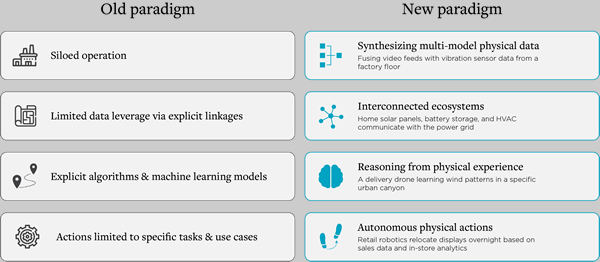

How does physical AI differ from the traditional automation and digital AI that many manufacturers are already familiar with in their Industry 4.0 initiatives?

Traditional automation, which has been the backbone of manufacturing for decades, is about programmed responses. It does best in highly structured, repetitive environments. Think of a classic robotic arm on an assembly line performing the same weld on every car chassis that passes. It’s deterministic and incredibly efficient, but it’s not intelligent. If a part is misaligned, the system typically stops or fails.

Digital AI, which has been the focus of most Industry 4.0 initiatives, is about digital analysis. It has been incredibly useful for tasks like predictive maintenance, supply chain optimization, and quality control analytics. These systems are excellent at processing vast amounts of data to find patterns and make predictions, but their output is typically a recommendation on a screen for a human to act on.

Physical AI is the next step. It provides autonomous reasoning and action, combining the analytical capabilities of digital AI with the ability to perceive, reason, and act directly in the physical world. Instead of being programmed for a specific function, a Physical AI system fuses different types of data from its environment, such as video feeds and vibration sensor data, to make autonomous decisions and take actions it hasn’t been explicitly programmed to take. Physical AI is the difference between an algorithm that predicts a machine failure and an intelligent robot that can physically inspect the machine, identify the fault, and adapt its operation to prevent a shutdown.
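To make the idea of fusing data sources more concrete, here is a minimal Python sketch. It is illustrative only: the `Observation` type, the thresholds, and the action names are hypothetical, and a real Physical AI system would typically learn these relationships with trained models rather than hand-set rules.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Observation:
    vision_anomaly: float   # hypothetical 0..1 anomaly score from a camera-based model
    vibration: list[float]  # recent accelerometer samples (arbitrary units)


def rms(samples: list[float]) -> float:
    """Root-mean-square amplitude of a vibration window (0.0 if empty)."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def decide_action(obs: Observation,
                  vib_limit: float = 2.0,
                  vision_limit: float = 0.7) -> str:
    """Fuse two sensing modalities into one decision (illustrative thresholds)."""
    vib_high = rms(obs.vibration) > vib_limit
    looks_wrong = obs.vision_anomaly > vision_limit
    if vib_high and looks_wrong:
        return "stop_and_inspect"  # both modalities agree: likely a real fault
    if vib_high or looks_wrong:
        return "reduce_speed"      # a single signal: adapt operation and keep watching
    return "continue"
```

The design choice is that neither sensor alone triggers the most drastic response; agreement between modalities does.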

How does physical AI translate into tangible benefits for manufacturing and factory automation, and what specific applications do you see it transforming within the industry?

The benefits of Physical AI in manufacturing range from immediate, tangible gains on the factory floor to long-term, strategic business model transformation. In our work, we categorize benefits into two primary value streams: operational efficiency and business reinvention.

On the efficiency front, which is where most manufacturers will see the first wave of value, we’re moving beyond the capability of traditional automation. This can be seen in:

- Intelligent workforce automation: Rather than replacing skilled workers, Physical AI acts as a force multiplier. We’re seeing this in applications where AI-powered augmented reality projects assembly instructions directly onto a workpiece. A technician sees the next step overlaid onto the physical object, and the AI uses cameras to verify that each component is placed correctly before they proceed, drastically reducing errors. In physically demanding roles, AI-powered robotics are helping human workers be safer, faster, and more accurate.

- Dynamic process automation: Instead of automating a static, repetitive task, Physical AI enables adaptive robotics. For example, in welding or finishing applications, cast parts often have minor dimensional variations. A traditional robot would follow the same rigid path every time, resulting in inconsistent quality. An adaptive robot uses a 3D vision system to scan each part, allowing an AI model to generate a tool path tailored to that part in real time. This ability to perceive and adapt to real-world variability brings a step change in quality and waste reduction. Similarly, in quality control we’re moving beyond simple vision systems that just flag defects. We now see autonomous robots equipped with tactile or ultrasonic sensors that can perform complex, non-destructive testing on aerospace components.
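A rough sketch of the adaptive idea, assuming the 3D scan arrives as a list of (x, y, z) points in millimetres: each waypoint on a nominal tool path is moved to the height of the nearest measured surface point plus a fixed torch standoff. The function names and the 5 mm standoff are hypothetical, and nearest-point snapping is a deliberately simple substitute for AI-driven path generation.

```python
import math

# Hypothetical representation: (x, y, z) coordinates in millimetres.
Point = tuple[float, float, float]


def nearest(scan: list[Point], x: float, y: float) -> Point:
    """Scanned point closest to (x, y) in the horizontal plane."""
    return min(scan, key=lambda p: math.hypot(p[0] - x, p[1] - y))


def adapt_path(nominal: list[Point], scan: list[Point],
               standoff: float = 5.0) -> list[Point]:
    """Shift each nominal waypoint so the tool follows the measured part surface."""
    adapted = []
    for x, y, _nominal_z in nominal:
        _, _, surface_z = nearest(scan, x, y)
        adapted.append((x, y, surface_z + standoff))
    return adapted
```

Interpolating between several neighbouring scan points would give a smoother path; nearest-point lookup keeps the example short.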

Beyond immediate efficiency gains, the second, more profound benefit is business reinvention. Physical AI allows manufacturers to shift from selling a static product to providing a dynamic service. Consider a company that sells industrial pumps. Traditionally, this is a one-time capital sale. Once the pump is embedded with sensors and edge AI, it becomes an intelligent asset that can predict its own maintenance needs. More importantly, it can autonomously adjust its operating parameters to optimize for energy efficiency or to prolong its lifespan based on real-time conditions. This enables the manufacturer to sell an outcome, like guaranteed uptime or optimized flow-as-a-service, creating a recurring revenue stream and a data advantage over competitors.

You point out how the rapid advancement of Physical AI is enabled by a convergence of technologies, including the decreased costs and increased capabilities of sensors, edge computing, and robotics. How are these advances making physical AI solutions more accessible and transformative for manufacturers today?

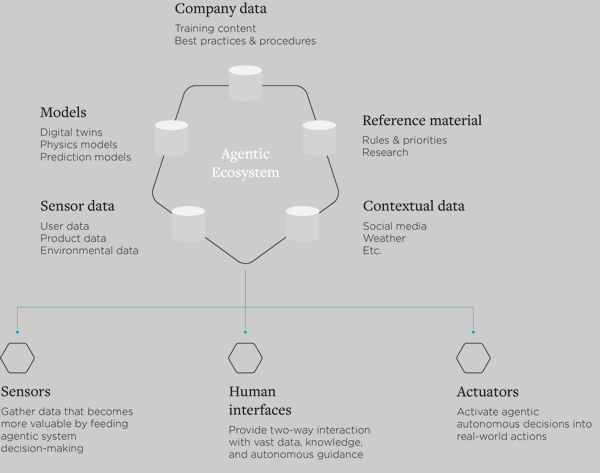

This convergence is why Physical AI is moving from research labs to the factory floor now. Each technology has matured to a point where combined, they enable valuable commercial deployments in industry.

Sensors enable the perception layer of a system. Cameras, LiDAR, vibration sensors, and similar devices are becoming more sophisticated and less expensive, allowing us to capture real-time data from unstructured industrial environments and stream it to AI models. This data gives the system true environmental awareness.

The increased capabilities of edge computing platforms allow AI inference to run directly on the device. This is essential for meeting the low-latency requirements of real-time robotic control and for ensuring systems operate safely and reliably, which is critical in production environments.

Enabling Physical AI systems to take action in the physical world requires actuation and robotics. With physical platforms becoming more capable and less expensive, turning an AI model’s digital decisions into real-world actions is more accessible than ever.

Manufacturers can now deploy systems that are aware of their environment, responsive in real-time, and that are physically capable of performing complex tasks.

Your work highlights that physical AI operates under the unforgiving laws of physics, where actions can have "irreversible consequences," elevating safety to a foundational design principle. Drawing on these insights, especially the example of a warehouse robot's software update leading to a shelving unit collapse, what are the paramount safety considerations for manufacturers deploying physical AI systems, and how can they be effectively mitigated to ensure industrial-grade safety?

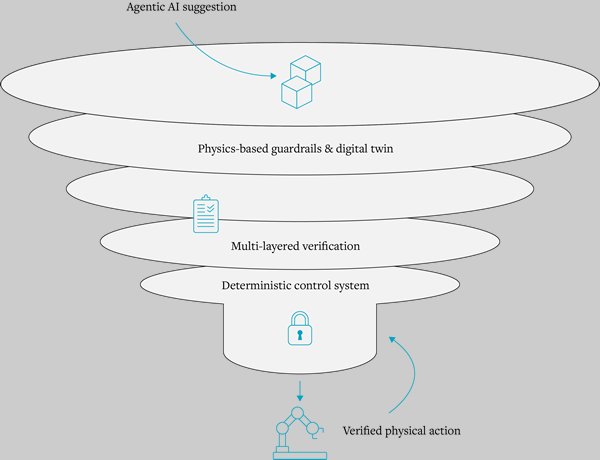

This is the most critical distinction between digital and physical AI. An error in a digital system, like a flawed data entry, can usually be corrected. In Physical AI, a software flaw can lead to irreversible consequences. For example, in a digital-only context, an AI hallucination might produce an informational error. In a Physical AI system controlling a robotic arm, that same type of hallucination could lead to an unpredictable, dangerous, and potentially catastrophic physical action.

To ensure industrial-grade safety, designing a multi-layered mitigation system is key. Individual approaches are specific to each deployment, but broadly, the core of a safe system is ensuring the AI can’t command an action that is physically impossible or known to be unsafe. Before the AI’s proposed action is sent to the hardware, it’s first tested in a digital environment. If it violates a physical law or a predefined safety rule, like “never exceed a certain force”, the command is rejected immediately. Using a multi-layered verification approach means we’re not relying on the AI model alone: passing commands through a series of deterministic checks, like sensor cross-validation and logic-based sanity checks (e.g. is a person in the work cell?), provides additional layers of safety. From an architectural perspective, a hybrid approach works well. The creative, probabilistic power of AI handles high-level planning (“what is the most efficient way to stack these boxes?”), while execution of the movement is delegated to a deterministic, safety-focused control system that is firewalled from the AI. This allows for a system where the AI can suggest what to do, while the safety system has the final say on whether it can be done safely.
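The layered idea can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not a safety implementation: the force limit, workspace box, and sensor tolerance are hypothetical values, the workspace check is a crude stand-in for testing the action in a digital environment, and real cells rely on certified safety controllers rather than application code. What it shows is the structure: the AI only proposes a command, and deterministic checks decide whether it reaches the hardware.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable

# Hypothetical limits; real values come from the cell's risk assessment.
MAX_FORCE_N = 150.0
WORKSPACE_M = ((0.0, 1.0), (0.0, 1.0), (0.0, 0.8))  # x, y, z bounds in metres
SENSOR_TOLERANCE_N = 5.0


@dataclass(frozen=True)
class Command:
    force_n: float                        # force the AI planner wants to apply
    target_m: tuple[float, float, float]  # target tool position


@dataclass(frozen=True)
class CellState:
    person_in_cell: bool  # e.g. from a light curtain or area scanner
    force_a_n: float      # two redundant force sensor readings
    force_b_n: float


def check(cmd: Command, state: CellState) -> tuple[bool, str]:
    """Deterministic rules applied to every AI-proposed command."""
    if cmd.force_n > MAX_FORCE_N:
        return False, "force limit exceeded"
    # Crude stand-in for simulating the move: is the target inside the workspace?
    for value, (low, high) in zip(cmd.target_m, WORKSPACE_M):
        if not low <= value <= high:
            return False, "target outside workspace"
    # Sensor cross-validation: redundant readings must agree.
    if abs(state.force_a_n - state.force_b_n) > SENSOR_TOLERANCE_N:
        return False, "force sensors disagree"
    # Logic-based sanity check.
    if state.person_in_cell:
        return False, "person in work cell"
    return True, "ok"


def gate(cmd: Command, state: CellState,
         send_to_controller: Callable[[Command], None]) -> str:
    """Only commands that pass every check reach the motion controller."""
    allowed, reason = check(cmd, state)
    if allowed:
        send_to_controller(cmd)
    return reason
```

Because `check` never consults the AI model, a hallucinated or malformed command is rejected by the same fixed rules every time.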

How can manufacturers strategically integrate Physical AI agents into their operations to augment human capabilities, build trust with the workforce, and effectively manage potential bottlenecks in human-robot collaboration, particularly in complex, unstructured manufacturing environments?

The strategic goal should be to create “collective intelligence”, where collaboration between humans and AI agents achieves outcomes greater than either working in isolation. The focus should be on augmentation and human-AI teaming, not just supervision.

Building trust is incredibly important. The most effective way to do this is through a phased approach that empowers the workforce. It’s crucial to begin by deploying AI as a supportive tool, giving employees access to AI-powered systems that provide real-time guidance, support, and safety monitoring. When workers see the technology as a companion that makes their job easier and safer, it builds a strong foundation of trust. Additionally, keeping humans in the loop, by starting with systems that provide alerts and require human approval for actions, allows workers to understand what the system is doing and why, building confidence in its decision-making while catching potential issues early. A collaborative design approach, where human-machine interfaces are designed specifically for the manufacturing environment, further improves adoption and reduces bottlenecks. This means moving beyond screens to ruggedized, hands-free interfaces with voice controls or augmented reality displays that allow workers to interact with AI agents naturally and safely during their tasks.
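The “alert and approve” stage can be sketched as a small wrapper in which the agent explains its proposed action and only acts on an explicit human yes. The names here (`Proposal`, `run_with_approval`, the operator callback) are hypothetical; in practice the approval might come through a voice command or an AR display rather than a function call.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class Proposal:
    action: str  # what the agent wants to do
    reason: str  # explanation shown to the worker


def run_with_approval(proposal: Proposal,
                      ask_operator: Callable[[Proposal], bool],
                      execute: Callable[[str], None],
                      log: list[str]) -> bool:
    """Alert-and-approve mode: the agent never acts without a human yes."""
    log.append(f"ALERT: {proposal.action} (reason: {proposal.reason})")
    if ask_operator(proposal):
        execute(proposal.action)
        log.append(f"APPROVED: {proposal.action}")
        return True
    log.append(f"DECLINED: {proposal.action}")
    return False
```

Keeping a log of alerts, approvals, and declines gives workers and engineers a record of what the system wanted to do and why.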

By framing the integration as a partnership, manufacturers can ensure that Physical AI enhances the unique adaptability and problem-solving skills of their people, turning potential bottlenecks into opportunities for collaboration.


Could you discuss one or two examples that demonstrate the current and near-future impact of Physical AI in advanced manufacturing and factory automation, showcasing its commercialization potential?

Two examples that are highlighted in our research demonstrate that Physical AI is already delivering significant commercial value.

The first is in advanced predictive maintenance. Gecko Robotics uses an AI-powered robotic platform for real-time data acquisition on critical infrastructure in manufacturing facilities. Their robots move over boilers, tanks, and pipes, using sensors to gather data that would be impossible for humans to collect safely or comprehensively. The AI then analyzes this data to predict failures with high accuracy. This is a great example of Physical AI: a physical robot performing a complex task to gather data that a powerful AI model then uses to deliver value by reducing downtime and preventing failures.

The second is in automating logistics bottlenecks. Here the example is the collaboration between DHL Supply Chain and Boston Dynamics to deploy the “Stretch” robot. Trailer unloading is a physically punishing, high-turnover job that has historically been very difficult to automate due to the unstructured nature of stacked boxes. Stretch uses a vision system and a powerful AI model to identify and manipulate a wide variety of boxes in a real-world unloading environment. This directly addresses a major pain point, increasing the flow of goods into a facility while improving employee safety.

Both of these examples show that Physical AI isn’t a future-state technology. It’s being commercially deployed now to solve specific, high-value industrial problems that traditional automation couldn’t address.

To learn more about the work that Synapse does, visit the website here

To download the white paper “Physical AI: Where Intelligence Takes Shape” click here


Mat Gilbert, Director, Head of AI & Data at Synapse, part of Capgemini Invent

Mat is a distinguished technology leader at the forefront of new product development, integrating advanced AI, data analytics, and smart sensing technologies to create solutions that benefit both people and the planet. With a future-focused approach, Mat spearheads innovations that incorporate ethical and environmental considerations, working as a technical authority in the technology sector. His work not only expands the possibilities of technological advances but also ensures that these innovations are sustainable and human-centric.

The content & opinions in this article are the author’s and do not necessarily represent the views of ManufacturingTomorrow
